Architect’s View: Unpacking the Cloudflare Outages of Late 2025

In the world of distributed systems, there is an old adage: "It’s not if you fail, but how you fail."

Recently, Cloudflare, a backbone of the modern internet, stumbled twice in under three weeks. On November 18 and December 5, 2025, it experienced significant outages that rippled across the web. For Solution Architects, reading Cloudflare’s transparency reports (post-mortems) is better than a masterclass: it’s a chance to learn from the failures of a hyperscale system without paying the tuition ourselves.

Here is my breakdown of what happened, what went right, what went wrong, and the architectural lessons you should take back to your own teams.

1. The "Ghost Column" Incident (Nov 18, 2025)

The Trigger: A seemingly harmless database permission change.

The Chain Reaction:

- Engineers updated permissions on ClickHouse (the DB used for analytics).

- A scheduled query used to generate a "Bot Management feature file" (a config file used by Cloudflare’s Bot Management system) began returning duplicate columns because it wasn't strictly filtering by database name (`default` vs. `r0`).

- The resulting config file doubled in size, exceeding a hard-coded limit in the proxy software, which preallocates memory for a fixed maximum number of features.

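- The Result: The proxy crashed or threw errors when loading the oversized file. Because the file was regenerated every five minutes and only ClickHouse nodes that already had the permission change produced the bad version, good and bad files alternated, so the outage fluctuated and mimicked a DDoS attack.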
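The Nov 18 chain reaction can be modeled in a few lines of Python. This is a toy sketch, not Cloudflare’s code: the rows stand in for ClickHouse’s `system.columns`, and the table name `bot_features`, the feature names, and the cap `MAX_FEATURES = 4` are all hypothetical. The only point it makes is that a metadata query without a database filter silently doubles its output once a second database becomes visible.

```python
# Toy model of the Nov 18 chain reaction (hypothetical names and limits).
# Each row mimics a ClickHouse system.columns entry: (database, table, column).

MAX_FEATURES = 4  # hypothetical hard cap the consumer preallocates for

FEATURES = ["ua_entropy", "tls_fingerprint", "request_rate"]

# Before the permission change, the querying user only sees "default".
rows_before = [("default", "bot_features", name) for name in FEATURES]
# After it, the same columns are also visible through the "r0" database.
rows_after = rows_before + [("r0", "bot_features", name) for name in FEATURES]


def feature_names(rows, database=None):
    """Select column names for the feature table.

    database=None reproduces the missing `WHERE database = ...` filter.
    """
    return [
        name
        for db, table, name in rows
        if table == "bot_features" and (database is None or db == database)
    ]


def load_feature_file(names):
    """Consumer that trusts its input and fails hard past the cap."""
    if len(names) > MAX_FEATURES:
        raise RuntimeError(f"{len(names)} features exceed limit of {MAX_FEATURES}")
    return names
```

With `rows_after`, the unfiltered `feature_names(rows_after)` returns six names and `load_feature_file` raises, while `feature_names(rows_after, database="default")` returns the original three and loads fine.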

2. The "Killswitch" Incident (Dec 5, 2025)

The Trigger: An emergency config change to disable an internal testing tool.

The Chain Reaction:

- Cloudflare was rolling out an increase to its WAF’s request-body buffer (from 128KB to 1MB) to mitigate a React Server Components vulnerability.

- They realized an internal testing tool couldn't handle the new buffer size.

- They used a Global Configuration system (instant propagation) to disable that tool via a "killswitch."

- The Bug: Applying the killswitch to this action type (`execute`) exposed a latent bug in the Lua code. Evaluation of the rule was skipped, but the code subsequently tried to access the result of that skipped evaluation (a `nil` value).

- The Result: Immediate HTTP 500 errors for roughly 28% of the HTTP traffic Cloudflare serves.
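The shape of the Dec 5 bug is not specific to Lua. Below is a hypothetical Python analogue (the real code was Lua; `RuleResult`, `evaluate`, and both post-processing functions are invented for illustration): a skipped evaluation leaves `execute` empty, and a later step assumes it is always populated. Because `execute` is annotated as `Optional`, a static checker such as mypy should flag the buggy line, which is the kind of protection a strong type system gives natively.

```python
# Hypothetical Python analogue of the Dec 5 bug; the real code was Lua.
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ExecuteResult:
    results_index: int


@dataclass
class RuleResult:
    action: str
    execute: Optional[ExecuteResult]  # None when the rule was skipped


def evaluate(action: str, killswitched: bool) -> RuleResult:
    """A killswitched rule is skipped, so it produces no execute result."""
    if killswitched:
        return RuleResult(action=action, execute=None)
    return RuleResult(action=action, execute=ExecuteResult(results_index=0))


def post_process_buggy(result: RuleResult, ruleset_results: List[str]) -> Optional[str]:
    # Assumes every "execute" rule ran; raises AttributeError when it was skipped.
    if result.action == "execute":
        return ruleset_results[result.execute.results_index]
    return None


def post_process_safe(result: RuleResult, ruleset_results: List[str]) -> Optional[str]:
    # Handles the skipped case explicitly instead of assuming it cannot happen.
    if result.action == "execute" and result.execute is not None:
        return ruleset_results[result.execute.results_index]
    return None
```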

Architectural Lessons: What Can We Learn?

1. The "Fail-Open" vs. "Fail-Closed" Dilemma

In both incidents, the system encountered an unexpected configuration state (an oversized file, or a `nil` value) and chose to crash or block traffic (Fail-Closed).

- The Lesson: For critical path components (like a reverse proxy), you must decide: Is it better to block legitimate traffic or let potential bad traffic through?

- The Fix: Cloudflare is moving to "Fail-Open" logic. If a Bot Management file is corrupt, the system should log an error and pass traffic without scoring, rather than dropping the request.

- Architect’s Advice: Wrap your critical configuration parsers in `try/catch` blocks that default to a "known good state" (or the previous working config) rather than bubbling up a crash.
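A minimal Python sketch of that advice, assuming configs arrive as JSON strings; `ConfigHolder` and `reload` are hypothetical names. A failed parse is logged and the previous config keeps serving, so a bad update degrades to "stale" rather than "down".

```python
import json
import logging

log = logging.getLogger("config")


class ConfigHolder:
    """Serves the last config that parsed successfully (hypothetical helper)."""

    def __init__(self, known_good: dict):
        self.current = known_good  # baseline assumed to be valid

    def reload(self, raw: str) -> bool:
        """Try to swap in a new config; on any parse error keep the old one."""
        try:
            candidate = json.loads(raw)
            if not isinstance(candidate, dict):
                raise ValueError("config root must be a JSON object")
        except ValueError as exc:  # json.JSONDecodeError is a ValueError subclass
            log.error("rejected config update, keeping previous config: %s", exc)
            return False
        self.current = candidate
        return True
```

Python spells `try/catch` as `try/except`. The design choice that matters is that `self.current` is replaced only after every check has passed, so there is never a half-loaded config in service.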

2. The Dangers of "Global" Anything

The Dec 5th outage was exacerbated because the change was pushed via a system designed to be fast (seconds to propagate globally).

- The Lesson: Speed kills. Even "emergency" switches need blast radius control.

- Architect’s Advice: Avoid "Big Red Buttons" that affect 100% of your fleet instantly. Even your killswitches should have a staggered rollout (canary -> region -> global), or at least a rapid sanity check in a staging environment.
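The canary -> region -> global idea fits in a small loop. A minimal Python sketch, assuming the caller supplies three hypothetical hooks: `apply` pushes the change to one slice of the fleet, `healthy` runs a health gate for that slice, and `rollback` undoes the change. The stage fractions are illustrative, not a recommendation.

```python
from typing import Callable, Iterable, Tuple

# Hypothetical stage plan: (stage name, fraction of fleet covered).
DEFAULT_STAGES = [("canary", 0.01), ("region", 0.10), ("global", 1.0)]


def staged_rollout(
    apply: Callable[[str, float], None],
    healthy: Callable[[str], bool],
    rollback: Callable[[], None],
    stages: Iterable[Tuple[str, float]] = DEFAULT_STAGES,
) -> str:
    """Push a change stage by stage, rolling back at the first failed health gate."""
    for name, fraction in stages:
        apply(name, fraction)   # e.g. enable the killswitch on this slice only
        if not healthy(name):   # e.g. compare the 5xx rate against a baseline after a soak
            rollback()
            return f"rolled back at {name}"
    return "completed"
```

Because the Dec 5 errors were immediate, even a short soak at the canary stage would plausibly have stopped the change before it went global. The trade-off is that an emergency switch now takes minutes instead of seconds.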

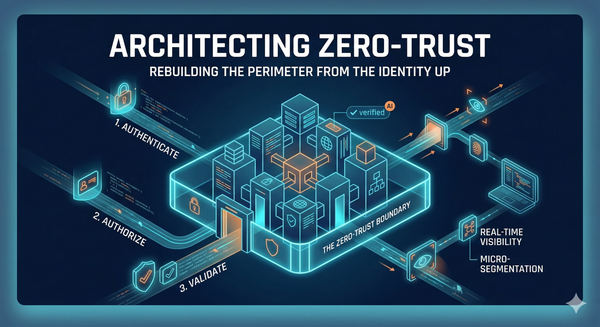

3. Trust No One (Not Even Your Own Database)

The Nov 18th outage was a classic input validation failure, but the input came from inside the house. The proxy software blindly trusted that the generated feature file would fit in its buffer.

- The Lesson: Treat internal configuration files with the same suspicion as user input.

- Architect’s Advice: Implement strict schema validation and size limits at the ingestion point. If the app expects a 1MB file and receives 2MB, it should reject the update and alert, not try to load it and crash.
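Here is what such an ingestion gate might look like in Python, assuming the feature file is JSON shaped like `{"features": [{"name": ...}, ...]}`. That format, `validate_feature_file`, `ConfigRejected`, and both default limits are hypothetical. The duplicate-name check is the one that would catch doubled rows like those on Nov 18.

```python
import json


class ConfigRejected(Exception):
    """Raised when an update fails validation; the caller keeps its current config."""


def validate_feature_file(raw: bytes, max_bytes: int = 1_000_000, max_features: int = 64) -> list:
    """Check size, schema, and invariants before anything loads the file."""
    # 1. Size limit first, so an oversized file is never even parsed.
    if len(raw) > max_bytes:
        raise ConfigRejected(f"file is {len(raw)} bytes, limit is {max_bytes}")
    try:
        doc = json.loads(raw)
    except ValueError as exc:  # covers JSONDecodeError and bad UTF-8
        raise ConfigRejected(f"not valid JSON: {exc}") from exc
    # 2. Schema: {"features": [{"name": str, ...}, ...]}.
    features = doc.get("features") if isinstance(doc, dict) else None
    if not isinstance(features, list) or not all(
        isinstance(f, dict) and isinstance(f.get("name"), str) for f in features
    ):
        raise ConfigRejected('expected {"features": [{"name": <str>, ...}, ...]}')
    # 3. Invariants: no duplicated entries, and stay within the consumer's hard cap.
    names = [f["name"] for f in features]
    if len(names) != len(set(names)):
        raise ConfigRejected("duplicate feature names")
    if len(names) > max_features:
        raise ConfigRejected(f"{len(names)} features exceed limit of {max_features}")
    return features
```

On `ConfigRejected`, the loader should alert and keep serving its current config rather than attempt the load.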

4. Observability Can Be Misleading

On Nov 18, the outage "pulsed" (up and down) because only some database nodes were generating bad files. This gray failure mode led engineers to suspect a DDoS attack, delaying the real fix.

- The Lesson: Non-binary failures (gray failures) are the hardest to debug.

- Architect’s Advice: Correlate your error rates with your deployment/change logs immediately. If errors start exactly when a change (even a DB permission change) occurred, it's rarely a coincidence.
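A minimal Python sketch of that correlation step, assuming you can export error-rate samples as `(timestamp, rate)` pairs and change events as dicts with an `at` timestamp. The function names, the 30-minute window, and the threshold are hypothetical choices.

```python
from datetime import datetime, timedelta
from typing import List, Optional, Tuple


def error_onset(samples: List[Tuple[datetime, float]], threshold: float) -> Optional[datetime]:
    """Return the first timestamp whose error rate reaches the threshold."""
    for at, rate in sorted(samples):
        if rate >= threshold:
            return at
    return None


def suspect_changes(
    onset: datetime, changes: List[dict], window: timedelta = timedelta(minutes=30)
) -> List[dict]:
    """List changes made in the window before the onset, most recent first."""
    hits = [c for c in changes if onset - window <= c["at"] <= onset]
    return sorted(hits, key=lambda c: c["at"], reverse=True)
```

A gray failure like Nov 18 produces several onsets as errors come and go; it is the first one that should be fed into `suspect_changes`, and the change log should include database and permission changes, not only code deploys.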

Scorecard: What Was Done Well vs. Not Well

What Was Done Well

- Transparency: Cloudflare’s post-mortems are the gold standard. They shared the exact code snippets (Lua) and SQL queries responsible.

- Rapid Revert (Dec 5): Once the cause was identified, the change was reverted at 09:11 and traffic had recovered by 09:12.

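- Migration to Rust: They noted that this class of bug is prevented by languages with strong type systems, and that the replacement code in their Rust-based proxy (part of an ongoing migration away from Lua) did not hit the `nil` error on Dec 5. This supports the architectural shift towards strongly typed languages for infrastructure, with one caveat: the Nov 18 failure happened in Rust code that panicked on an unhandled error, so the language is no substitute for explicit error handling.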

What Was Not Done Well

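- Internal Tooling Fragility: On Dec 5, an internal test tool that could not handle the new buffer size forced a second, unplanned change in the middle of the rollout, and that change caused the outage. Non-critical internal tooling should not be able to force risky changes onto your production path.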

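- Status Page Confusion: On Nov 18, Cloudflare’s status page went down at the same time, purely by coincidence, even though it is hosted off Cloudflare’s own infrastructure. The coincidence reinforced the DDoS theory and slowed diagnosis. Hosting your status page on completely separate infrastructure (e.g., a different cloud provider) is still the right call so it survives your own outage, but expect coincidences that point diagnosis the wrong way.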

- Implicit Assumptions in SQL: The query that read column metadata from `system.columns` had no `WHERE database = ...` clause. That implicit assumption was a technical-debt time bomb that finally exploded.

Practical Checklist for Your Team

Based on these outages, run this quick audit on your current architecture:

- [ ] Config Safety: If your app loads a corrupted config file on startup, does it crash, or does it keep running with the last known good config?

- [ ] Gradual Rollouts: Do you have a mechanism to apply config changes to 1% of your users before 100%?

- [ ] Internal Inputs: Do you validate the size and schema of files generated by your own internal cron jobs/pipelines?

- [ ] Emergency Brakes: Do your killswitches have their own safety latches? (i.e., can a typo in a killswitch take down the site?)
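For the "Gradual Rollouts" item, one common way to expose a change to 1% of users is deterministic hash bucketing. A minimal Python sketch, with `in_rollout` and its parameters as hypothetical names: the same user always lands in the same bucket for a given change, so growing from 1% to 10% to 100% only ever adds users.

```python
import hashlib


def in_rollout(user_id: str, change_id: str, percent: float) -> bool:
    """Deterministically decide whether a user falls inside a percentage rollout."""
    # Keying the hash on change_id gives each change its own independent slice of users.
    digest = hashlib.sha256(f"{change_id}:{user_id}".encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # 0..9999, 0.01% granularity
    # Raising percent only adds buckets, so users already exposed stay exposed.
    return bucket < percent * 100
```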

Failures happen. The goal is to design systems that fail gracefully, not catastrophically.